Abstract

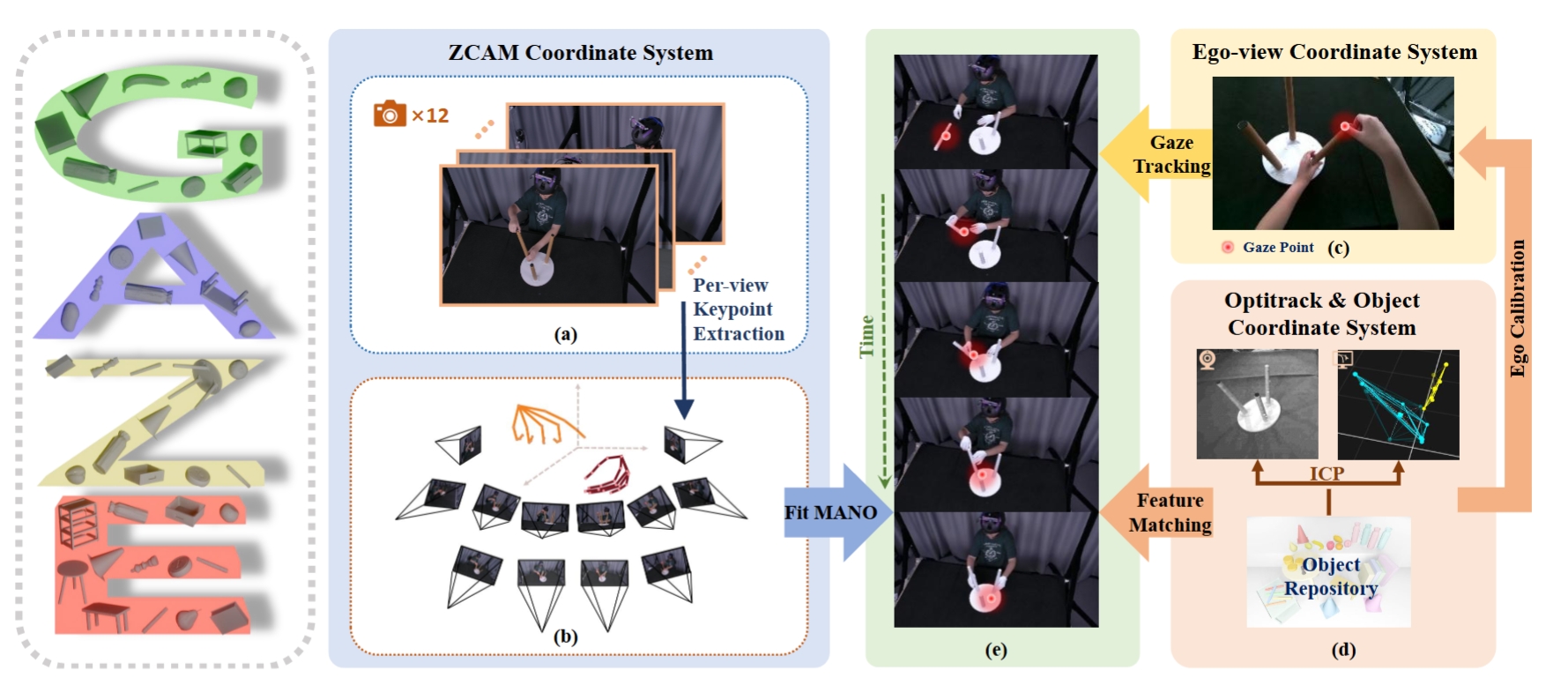

Gaze plays a crucial role in revealing human attention and intention, particularly in hand-object interaction scenarios, where it guides and synchronizes complex tasks that require precise coordination between the brain, hand, and object. Motivated by this, we introduce a novel task: Gaze-Guided Hand-Object Interaction Synthesis, with potential applications in augmented reality, virtual reality, and assistive technologies. To support this task, we present GazeHOI, the first dataset to jointly capture gaze, hand, and object interactions in 3D. The synthesis task poses significant challenges due to the inherent sparsity and noise in gaze data, as well as the need for high consistency and physical plausibility in the generated hand and object motions. To tackle these issues, we propose a stacked gaze-guided hand-object interaction diffusion model, named GHO-Diffusion. The stacked design effectively reduces the complexity of motion generation. We also introduce HOI-Manifold Guidance during the sampling stage of GHO-Diffusion, enabling fine-grained control over generated motions while keeping them on the data manifold. Additionally, we propose a spatial-temporal gaze feature encoding for the diffusion condition and select diffusion results based on consistency scores between gaze-contact maps and gaze-interaction trajectories. Extensive experiments highlight the effectiveness of our method and the unique contributions of our dataset.

Overview

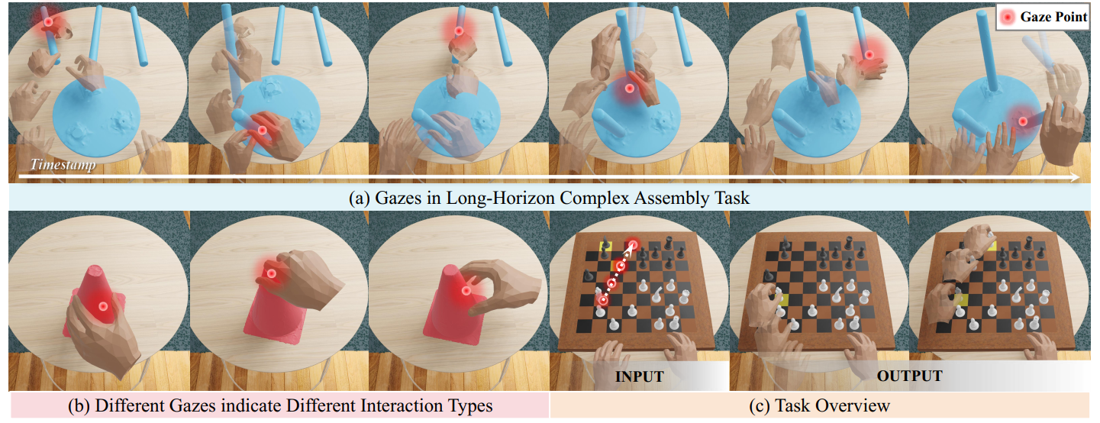

Gaze plays a crucial role in hand-object interaction tasks. Subfigure (a) illustrates how gaze helps coordinate the hands, objects, and brain during a complex table assembly. Subfigure (b) shows how gaze affects attention allocation and grasp strategies. Building on these observations, we introduce the gaze-guided hand-object interaction synthesis task, depicted in subfigure (c), which takes gaze data as input and generates hand-object interactions that align with human intentions.

Dataset

Video

BibTeX

@misc{GazeHOI,
  title={Gaze-guided Hand-Object Interaction Synthesis: Dataset and Method},
  author={Jie Tian and Ran Ji and Lingxiao Yang and Suting Ni and Yuexin Ma and Lan Xu and Jingyi Yu and Ye Shi and Jingya Wang},
  year={2024},
  eprint={2403.16169},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2403.16169},
}